What kind of mask should I be wearing to protect against COVID-19?

By Brent Stephens on August 13, 2020

November 10, 2020 Update: this post has been updated to incorporate new literature and resources and several helpful suggestions from colleagues. Updates are noted throughout.

We’ve been on an unnecessarily confusing roller coaster ride in the United States in the last few months regarding the role that masks and face coverings can play in slowing or stopping the transmission of COVID-19.

We’ve gone from the CDC telling the public not to wear masks in March 2020, to some public understanding of the idea that “I wear a mask to protect you, not me!”, to where I think we should have been all along, which is: “my mask protects you, and might also protect me, so we should both wear masks.”

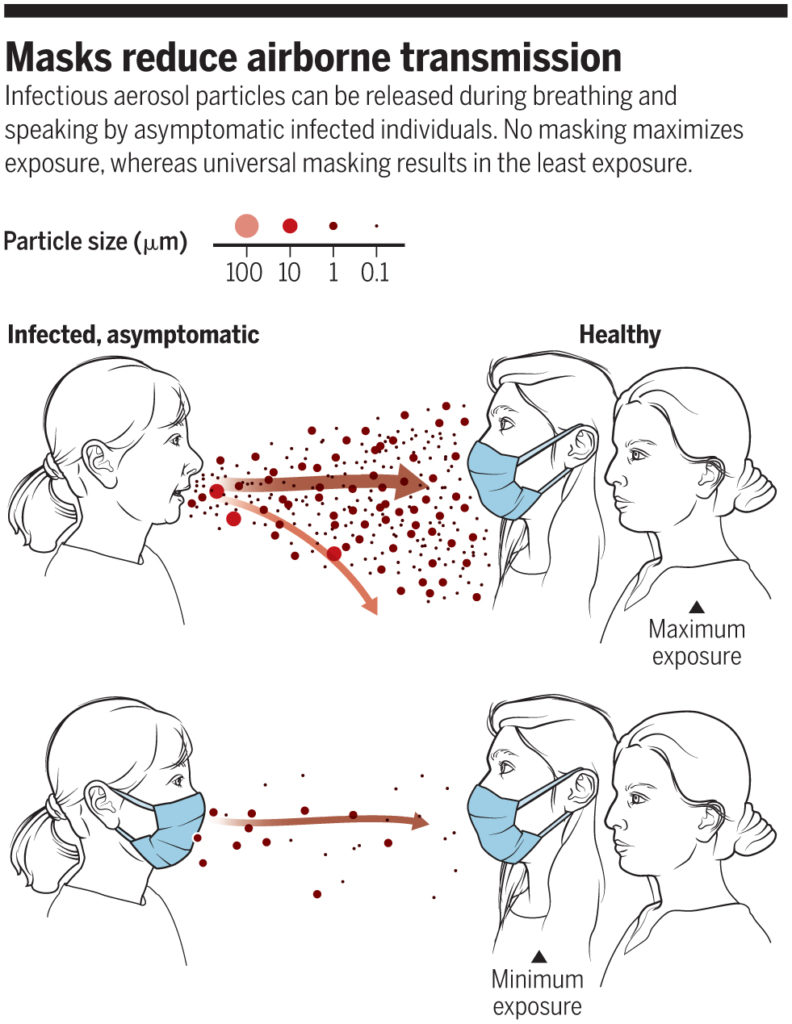

The reasoning behind this latter thinking was outlined well in a perspective piece in Science in June 2020:

“No masking maximizes exposure, whereas universal masking results in the least exposure” (Prather et al., 2020)

In fact, universal masking is now thought to be key to stopping the transmission of COVID-19, and the CDC now acknowledges that the widespread use of masks (or “face coverings” — I’ll primarily use “masks” for simplicity*) can “slow and stop the spread of the virus — particularly when used universally within a community setting.”

Now, as the country ventures into reopening K-12 schools and universities, the question I’ve been getting a lot lately is: “What kind of mask should I be wearing?”

I’ve asked myself the same question numerous times, and I have found the landscape on masks confusing and difficult to generalize.

Fortunately, numerous outlets have synthesized a lot of useful information already. FiveThirtyEight had an early summary of masks, including the basic concepts and the impacts of fabric materials, layers, and vents (the answer on the latter: don’t use vents because although they can protect you from others, they expel your own unfiltered respiratory emissions, which presents a potential hazard to others if you happen to find yourself among the many symptomatic, asymptomatic, or pre-symptomatic hosts of SARS-CoV-2).

More recently, Wirecutter published a helpful article on selecting cloth masks, which interviewed over 20 subject matter experts and focused especially on fit, comfort, and breathability, with some information on particle filtering effectiveness. And Emily Oster and Galit Alter at COVID Explained have a short, helpful primer on masks. Additionally, new test data continue to be published in a variety of outlets. I’m sure you’ve seen the headlines.

By now, I think there are some lessons to be learned that can help guide your mask selection based on factors that are known to influence their effectiveness and performance, which I will summarize here.

What do masks do?

One way that masks can help prevent infected individuals from infecting others is that they reduce the velocity of air expelled during breathing, talking, sneezing, and/or coughing. Reducing the velocity of air expelled from respiratory activities means that the expelled air won’t travel as far, and that’s a good thing because it means that virus-containing respiratory droplets hitching a ride on the expelled air shouldn’t travel as far either.

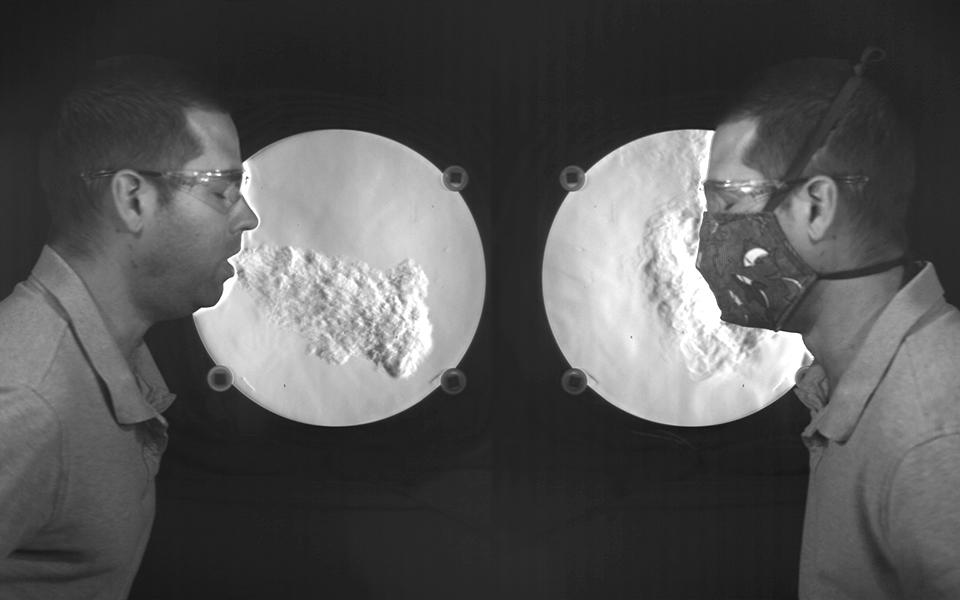

This phenomenon has been demonstrated in recent years through mock-up experiments and Schlieren imaging:

A visualization from NIST illustrates airflow when coughing, but it does not show the movement of virus particles (Staymates, 2020)

Masks can also filter respiratory particles of various sizes. And particle filtration can work in two directions: filtering particles during exhalation (which protects others) and filtering particles during inhalation (which protects yourself).

Depiction of aerosol filtration efficiency mechanisms for a fabric mask material (Konda et al. 2020)

Particle filtration efficiency, or the ability of a mask to filter airborne particles, often depends a lot on particle size and a lot on mask material.

And the overall filtration efficiency of a mask depends on both the particle filtration efficiency and how well the mask fits (and thus how much unfiltered air flows around the mask).
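To make the interaction between material and fit concrete, here is a minimal sketch. It assumes the simplest possible model, in which any air that leaks around the edges of the mask is not filtered at all and the rest passes through the material. The function name and the example numbers are hypothetical and are not taken from any of the studies discussed here.

```python
def overall_efficiency(material_efficiency: float, leak_fraction: float) -> float:
    """Overall particle removal when a fraction of the air bypasses the mask.

    Assumption: leaked air is completely unfiltered, and the remaining air
    is filtered at the material's own efficiency.
    """
    if not (0.0 <= material_efficiency <= 1.0 and 0.0 <= leak_fraction <= 1.0):
        raise ValueError("both inputs must be fractions between 0 and 1")
    return (1.0 - leak_fraction) * material_efficiency


# Illustrative only: a material that removes 95% of particles, worn with
# increasing amounts of edge leakage.
for leak in (0.0, 0.1, 0.3, 0.5):
    print(f"leak {leak:.0%}: overall efficiency {overall_efficiency(0.95, leak):.1%}")
```

Under this simplified model, even an excellent material loses much of its benefit as soon as a meaningful share of the air goes around it rather than through it.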

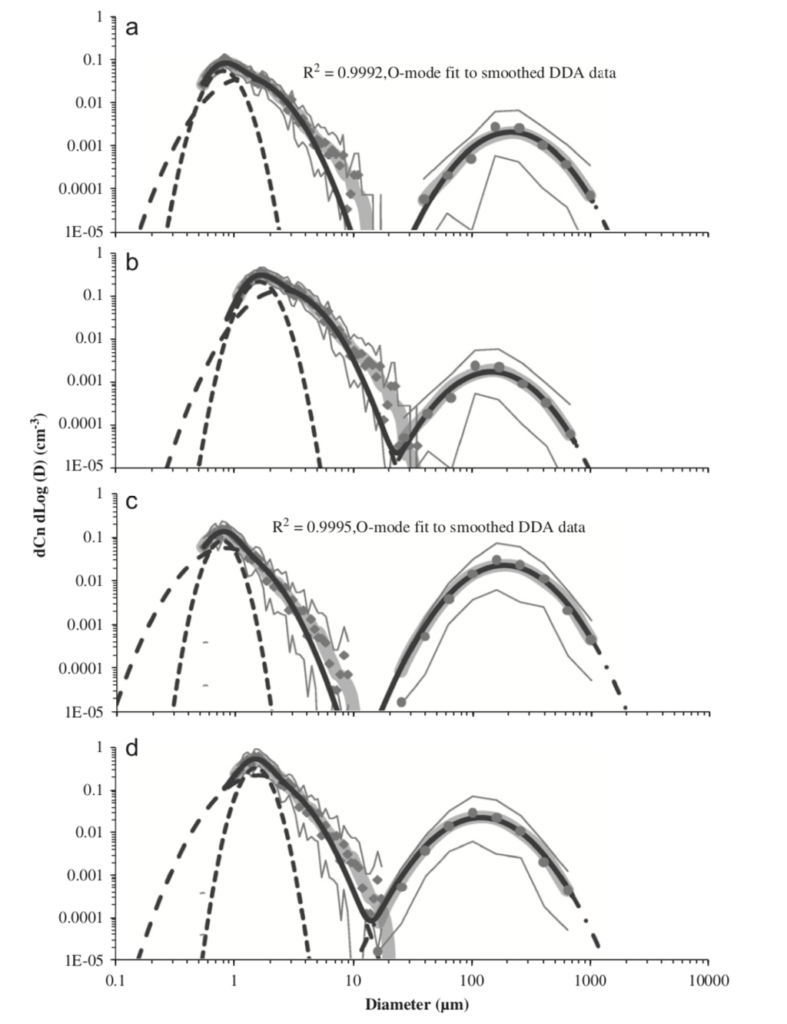

The combination of these factors means that when respiratory particles containing the virus are expelled from individuals wearing an effective mask, there won’t be as many particles expelled beyond the mask and they won’t travel as far, and that’s a good thing:

Masks with additional layers of fabric will reduce the number and transport distance of respiratory droplets (Bahl et al., 2020)

Similarly, if an individual is wearing a mask and breathing air laden with airborne particles containing the infectious virus, then their mask can filter out a fraction of those particles, reducing their own exposure.

But, as mentioned, this filtration ability, and therefore overall mask effectiveness, can depend a lot on particle size.
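The two-way benefit described above can be sketched with simple arithmetic. This is a rough illustration that assumes a single particle size, that the source's mask and the wearer's mask act independently, and that each mask has one overall efficiency that already includes fit. The 50% efficiency value is hypothetical.

```python
def relative_exposure(source_efficiency: float, wearer_efficiency: float) -> float:
    """Fraction of emitted particles still inhaled, relative to nobody masking.

    Assumption: the exhalation-side and inhalation-side reductions multiply.
    """
    return (1.0 - source_efficiency) * (1.0 - wearer_efficiency)


MASK = 0.5  # hypothetical overall efficiency of a single mask

scenarios = {
    "no masks": relative_exposure(0.0, 0.0),
    "only the infected person masked": relative_exposure(MASK, 0.0),
    "only the susceptible person masked": relative_exposure(0.0, MASK),
    "both masked": relative_exposure(MASK, MASK),
}
for name, exposure in scenarios.items():
    print(f"{name}: {exposure:.0%} of unmasked exposure")
```

In reality a mask's efficiency for exhaled particles and for inhaled particles need not be the same, so the two inputs are kept separate on purpose.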

Why does particle size matter?

The importance of particle size, such a seemingly small detail (pun intended), in the transmission of COVID-19 has been a hotly debated topic in the last several months.

The key issue in this debate is whether SARS-CoV-2 (and thus COVID-19) is transmitted primarily through small aerosols, large droplets, or touching surfaces (or some combination of the three).

Organizations like the WHO and CDC maintained for months that SARS-CoV-2 was primarily transmitted through large droplets that travel only short distances (less than 1 or 2 meters) where they can deposit directly onto the eyes, mouth, or nose of others and/or deposit on nearby surfaces (“fomites”) that are subsequently touched by others who then touch their eyes, mouth, or nose. Both organizations have considered transmission by larger particles (“droplets” in their nomenclature, conventionally meaning larger than 5 or 10 µm in diameter) to be dominant and transmission by smaller particles (“aerosols” in their nomenclature, conventionally meaning smaller than 5 or 10 µm in diameter) to be negligible.

While this way of thinking has dominated for most of this pandemic, there is also an increasing recognition of the importance that small aerosols likely play in transmission. In fact, so much recognition that the WHO updated their position in July 2020 to recognize the potential for airborne transmission via smaller aerosols. [November 2020 Update: the CDC similarly updated their position to include transmission by smaller aerosols in October 2020]

They updated their position in part because two leading researchers on respiratory emissions and modes of infectious disease transmission, Lidia Morawska and Don Milton, published an open letter appealing to the medical community at large to recognize the likelihood of airborne transmission of COVID-19 through small aerosols. The letter was backed by 239 signatories, largely from the aerosol science community (including myself). (The infectious disease community has now published their own letter with over 300 signatories warning that epidemiological data and clinical experiences in healthcare settings continue to support that the main mode of transmission is “short range through droplets and close contact.” So the “debate” continues, but I think it has more to do with how the two communities define “airborne” than anything else.)

From my perspective, we know that a wide range of particle sizes are emitted during human respiratory activities, including very small particles (conventionally called “aerosols” in medical communities) that can travel long distances and stay suspended in air for long periods of time, as well as very large particles (conventionally called “droplets” in medical communities) that don’t travel very far and don’t remain suspended in air for very long. The definition of “aerosols” versus “droplets” has long centered on an arbitrary cut-off of 5 or 10 µm in size. The reality of respiratory emissions is broader and more complex.**

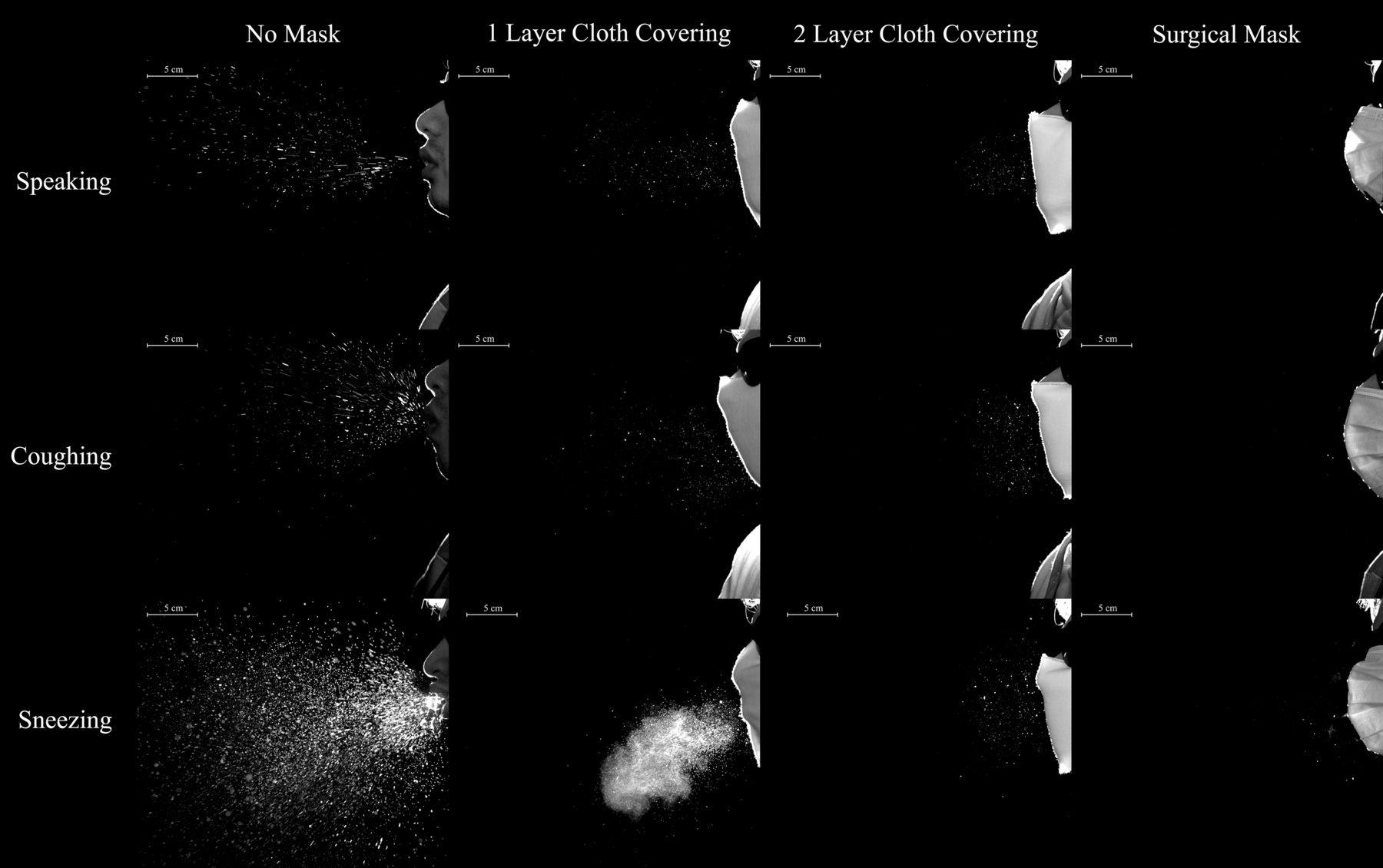

In one of the most comprehensive studies of respiratory emissions of which I am aware, researchers demonstrated two distinct distributions of human respiratory emissions generated during activities like speaking and coughing, organized chiefly by particle size, including one part of the distribution with an average diameter of ~0.5 to ~2 µm and another part of the distribution with an average diameter of ~50 to ~300 µm:

Particle size distributions resulting from (a) speaking (uncorrected data), (b) speaking (corrected data), (c) voluntary coughing (uncorrected data), and (d) voluntary coughing (corrected data) (Johnson et al., 2011)

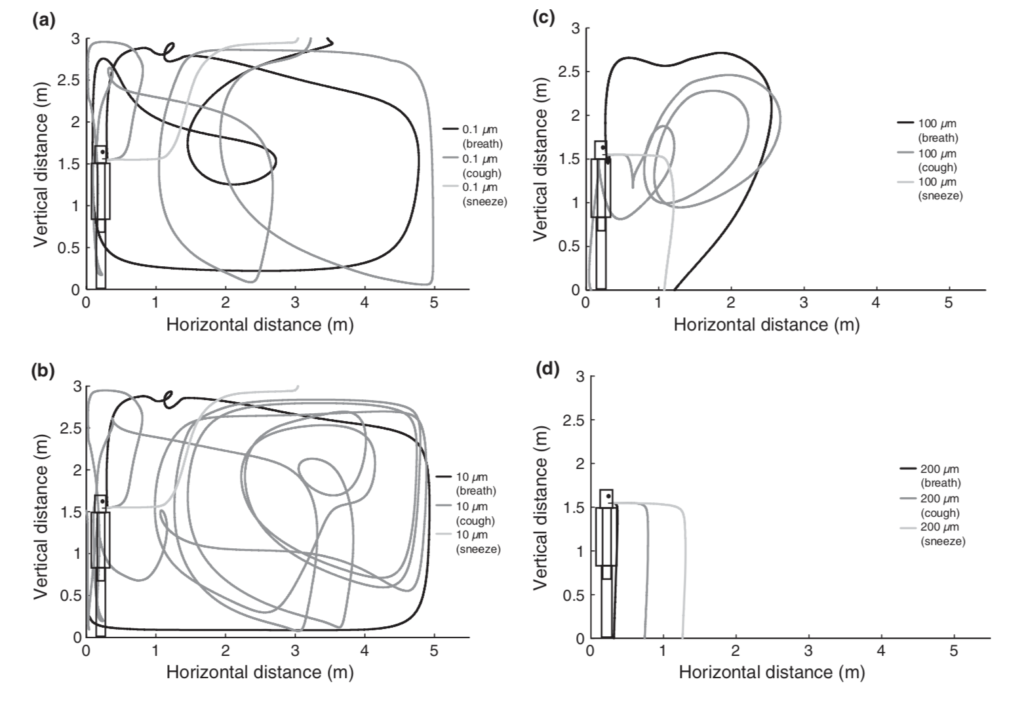

Particles in the smaller distribution exist in the respirable “fine particle” size range, which can easily remain suspended in air for minutes to hours in poorly ventilated spaces. Particles in the larger distribution exist as ballistic droplets that fall to the ground rapidly.

Particles near the arbitrary cut-offs of 5 or 10 µm (and smaller) can also remain suspended in air for at least several minutes and can easily mix within a room, contrary to the conventional “droplet” definition in medical communities:

Simulations of droplet trajectories for initial diameters of (a) 0.1 µm, (b) 10 µm, (c) 100 µm, and (d) 200 µm (Chen and Zhao, 2010)
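A back-of-the-envelope calculation shows why particle size matters so much for how long particles stay in the air. The sketch below uses Stokes' law for the settling velocity of a small sphere in still air. It is not the method used in the simulations above, and it makes several labeled assumptions: water-like particle density, still air at roughly room temperature, no evaporation, and no slip correction for the very smallest particles. Stokes' law also overestimates the fall speed of the largest droplets, so treat the 100 µm result as approximate.

```python
GRAVITY = 9.81             # m/s^2
PARTICLE_DENSITY = 1000.0  # kg/m^3, assumes water-like respiratory particles
AIR_VISCOSITY = 1.81e-5    # Pa*s, air at roughly 20 degrees C


def stokes_settling_velocity(diameter_um: float) -> float:
    """Terminal settling velocity (m/s) of a sphere in still air (Stokes' law)."""
    diameter_m = diameter_um * 1e-6
    return PARTICLE_DENSITY * diameter_m**2 * GRAVITY / (18.0 * AIR_VISCOSITY)


def time_to_fall(diameter_um: float, height_m: float = 1.5) -> float:
    """Seconds needed to settle from height_m to the floor in still air."""
    return height_m / stokes_settling_velocity(diameter_um)


for diameter in (1, 5, 10, 100):
    seconds = time_to_fall(diameter)
    print(f"{diameter:>4} µm: about {seconds / 60:,.1f} minutes to fall 1.5 m")
```

With these assumptions, a 1 µm particle takes hours to settle from head height, a 10 µm particle takes minutes, and a 100 µm droplet takes seconds, which is consistent with the point that particles near the 5 to 10 µm cut-off are far from ballistic.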

We’ve also learned from recent research on other respiratory viruses, including influenza, that many respiratory viruses are commonly found in the smaller aerosol size ranges in isolated studies of human respiratory activities as well as in samples collected from various indoor environments with infected individuals present, including in particle size fractions smaller than 5 or 10 µm (and even smaller than 1 µm). While the actual viruses in question are only perhaps ~60 nm to ~140 nm in size (i.e., ~0.06 to ~0.14 µm), they exist in the environment, either in air or on surfaces, encapsulated in respiratory fluid and thus in particles larger than their own size alone.

Similarly, and more recently, we’ve also learned that SARS-CoV-2 genetic material has been found in both aerosol (< 10 µm) and surface samples in hospitals with COVID-19 patients, including in Singapore, Wuhan (China), and Nebraska. While these previous studies reported the detection of viral RNA in aerosol and surface samples, they did not assess viability (but viability has long been assumed likely based on what we know about viability in aerosols and on surfaces via controlled experiments).

It was only in early August 2020 that we learned of the detection of viable, infectious SARS-CoV-2 in aerosol samples from a hospital at the University of Florida, including nearly 5 meters (nearly 16 feet) from COVID-19 patients. Granted, we don’t know if these viral concentrations were high enough to actually get someone sick, but finding viable SARS-CoV-2 in aerosols over 15 feet away from patients in highly engineered, well-ventilated, and well-filtered hospital settings does not exactly give me confidence that COVID-19 is transmitted only through large ballistic droplets that land on someone’s eyes, mouth, or nose.

Why does this all matter?

This definition of relevant particle sizes matters because if COVID-19 is transmitted substantively through small aerosols in addition to (or instead of) large droplets, it affects how we effectively control its spread.

Substantive aerosol transmission means we need more than physical distancing and face shields. It means we need better ventilation and air cleaning in buildings. And probably most importantly, it means we not only need universal masking, especially in indoor environments with other people present, but we also need those masks to be effective.

And if we need effective masks, it means we need to understand what particle sizes we are trying to protect ourselves and others from.

Are we worried about large respiratory droplets containing the virus that may be so large as to be visible to the naked eye, shot ballistically into our faces? Or are we worried about breathing small aerosols containing the virus that go undetected by the human eye?

To me, this distinction is less about “airborne” transmission, which seems to conventionally mean “highly infectious and able to transmit long distances” to the infectious disease and medical communities, versus “close contact” transmission. Rather, it is more about whether or not small respirable particles (or “aerosols”) contribute to COVID-19 transmission over any distances (including both close- and/or long-range distances). Unfortunately we still don’t even seem to know about the importance of aerosol versus droplet types of transmission in humans, let alone in animals, but lots of evidence points towards the likely importance of smaller aerosols.

Therefore, my position is that we should err on the side of caution in our use of masks for the public and we should seek to prevent the transmission of both small aerosols and large droplets. At least some of the medical community seems to agree with this idea.

With the assumption that both smaller aerosols and larger droplets may contribute substantively to transmission, my goal is to seek the most effective masks that we can reasonably acquire and comfortably use (in addition to continued physical distancing and other measures). I’m not talking about extreme measures like N95 or fan-powered respirators; rather I am seeking to better understand widely available fabric, cloth, and medical/surgical masks.

[November 2020 update: a recent summary of literature on face masks was also published in Nature in October 2020, and another in Mayo Clinic Proceedings.]

Filtration effectiveness of masks for aerosols and/or droplets

The reason that particle size is so important for masks is that the particle removal efficiency of different mask types can vary widely by (1) particle size, (2) mask material/fabric, and (3) how well a mask fits on your face.

Unfortunately, I cannot think of a single study that has thoroughly tested a wide spectrum of these factors influencing mask effectiveness across a widely representative sample of non-medical-PPE masks and materials.

Instead, most studies, including those published both pre-pandemic and during the current pandemic, have usually investigated just one or two of these factors in some depth and/or breadth.

[November 2020 update: note that the above conclusions are changing as we speak, as additional studies are being conducted to evaluate mask effectiveness using a range of methodologies. Additionally, the New York Times published a great visualization on how masks work in October 2020.]

There are also numerous epidemiological studies that have investigated the impacts of mask wearing in various populations, which can help translate from our understanding of mechanisms like filtration efficiency and mask fit to observable impacts in real human populations. However, these studies also necessarily have to gloss over some of these mechanistic details.

Here I summarize some relevant literature in these categories and then use that information to provide some fairly generalizable recommendations for masks for the general public.

I sort the literature broadly into the following categories: (1) measurements of filtration efficiency of masks (or mask materials alone) for aerosols and/or droplets, (2) measurements of mask fit effects, and (3) epidemiological investigations of mask wearers.

The filtration efficiency of masks and mask materials for both medical-PPE and non-medical-PPE style masks has been measured for a wide variety of materials subject to a wide variety of challenge aerosol sizes and types, including those ranging from ultrafine particles (i.e., < 0.1 µm) to respirable particles (i.e., 0.5-10 µm) to large visible droplets (i.e., > 1 mm). Test rigs have been set up to test materials by themselves, full masks by themselves, and full masks worn by mannikins or actual people. Some of these tests have also been set up to measure the filtration efficiency of masks used for inhalation or exhalation, or both.

There is even standardized respirator and mask testing equipment for making these types of measurements, although others have gotten creative in how they measure filtration efficiency of masks and mask materials (for better or worse), including reducing the costs of testing by implementing low cost air quality sensors and improving testing speeds by cell phone imaging.

N95 respirators are commonly used as the standard reference for comparison in these measurements, as they meet NIOSH N95 classification requirements, meaning they have a particle removal efficiency of at least 95% when challenged with ~0.18 µm size particles using a standard respirator fit test instrument. This size range is barely larger than common respiratory viruses on their own, and it corresponds to what is typically near the “most penetrating particle size” for many types of filtration media. Somewhat counterintuitively, the filtration efficiency for many media types is commonly highest for both the largest particles (e.g., > 5 µm) and the smallest particles (e.g., < 0.1 µm), but is lowest for those “Goldilocks” sizes in the middle (e.g., 0.1-1 µm). So, comparisons to N95 are a pretty extreme (and perhaps even unreasonable) target for evaluating the filtration efficiency of many cloth masks, but it’s a good standardized reference for comparison — a sort of “platinum level” of achievement that other masks strive to attain.

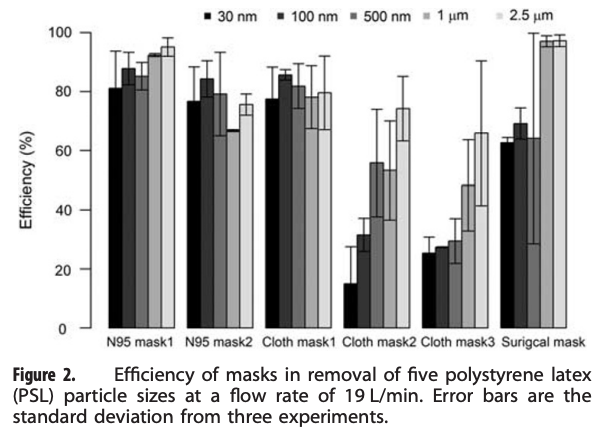

An excellent example of one of these types of studies is summarized below. Researchers aerosolized a variety of different size particles into a test chamber with a mannikin head wearing one of several types of masks, including a couple of N95 masks, a few cloth masks, and a surgical mask. They found some variability in the N95 masks, but overall their removal efficiency for all particle sizes tested was quite high (i.e., >70% typically, and increasing to >90% with larger particle sizes). N95 mask efficiencies lower than 95% were probably due to mask fit issues (i.e., air leakage around an imperfectly sealed mask). These effects are important to capture because they diminish mask efficiency compared to testing media filtration efficiency by itself.

The surgical mask they tested was just as efficient as the N95 masks for 1 µm and 2.5 µm particles, but was less efficient for particles smaller than 1 µm (~60% efficiency). Two of the three cloth masks performed worse than both the N95 and surgical masks, especially for smaller particles (i.e., only 50-60% removal of 1 µm particles and only 15-30% removal of 0.03 and 0.1 µm particles), although one of the cloth masks performed just about as well as both the N95 and surgical masks (i.e., ~80% removal for 1 µm particles). How confusing is that!

An example of a mask efficiency test using a mannikin and several sizes of particles (Shakya et al., 2017)

What is not shown above is the removal efficiency of these masks for “droplets.” However, if a fabric or fibrous media filtration device can capture 100% of 2.5 µm particles, I am generally not concerned about its ability to capture much larger “droplet-sized” particles (the typical U-shaped efficiency curve helps us here). Droplet-sized particles are so large that they are difficult to test in a setup like this: they don’t hang around long enough or in sufficient numbers to be counted.

So, my take from this study (with an admittedly very small sample size) is that if you’re concerned about ~1-2.5 µm particles, which I think is probably a reasonable proxy for virus-containing respiratory particles, then cloth mask #1 and the surgical mask performed about as well as (if not better than) the N95 respirators (taking fit into account), and I would recommend both of those masks. If you want to be on the safe(r) side and focus on the smaller size range of ~0.5 µm, then the surgical mask starts to look less convincing (but with big error bars, so it’s actually hard to say) while cloth mask #1 still looks good. I would rather not wear masks #2 and #3 given these results.

You might be wondering… what exactly are these three cloth masks and why are they different? Fortunately the study shows photos of each one:

Although no other specific details are reported, cloth masks #2 and #3 look like they may be single or double fabric layers — not unlike what is commonly available and fashionably worn in the U.S. today. Cloth mask #1 seems to be thicker and perhaps integrates multiple fabric types (it also has exhaust valves, which should be avoided or taped if they can’t be avoided).

Testing of masks and mask materials has continued, with a number of recent publications that can further guide us.

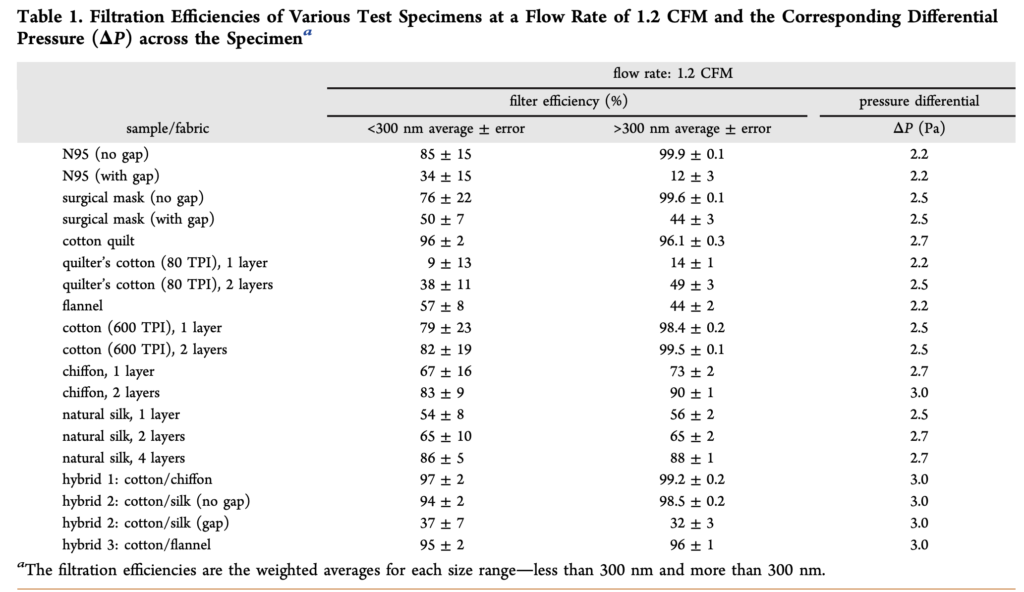

In an early study during this pandemic (in April 2020), researchers measured the filtration efficiency of several common fabrics, including “cotton, silk, chiffon, flannel, various synthetics, and their combinations,” for a range of particle sizes from ~0.01 µm (10 nm) to ~5 µm. Fabrics were tested solely as fabrics — not shaped into mask form. They were also compared to N95 and surgical mask materials. The study found a wide variety of removal efficiencies by the different fabrics, ranging from 5% to 80% for < 0.3 µm particles and from 5% to 95% for > 0.3 µm particles:

For reference, the removal efficiency of the tested N95 mask was ~85% for < 0.3 µm particles and ~99.9% for > 0.3 µm particles. The tested surgical mask performed similarly, with a removal efficiency of ~76% for < 0.3 µm particles and ~99.6% for > 0.3 µm particles. Clearly, as we have also learned from other prior testing, N95 respirator masks and surgical masks are both useful for blocking the transmission of both aerosols and droplets. What this study adds is that two layers of cotton quilt performed similarly to both of these standard reference materials, as did a high thread count cotton fabric (thread count of 600, with two layers slightly better than one), as well as two layers of chiffon. Various combinations of different fabrics such as cotton and chiffon, cotton and silk, and cotton and flannel also performed well. And again, gaps, which would ostensibly be caused by inadequate mask fit (but were simply simulated here by creating an artificial gap), greatly reduced the effectiveness of all tested materials, including surgical masks and N95 respirators.
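One way to build intuition for why additional layers tend to help is to treat the layers as filters in series. The sketch below is an idealization and not something the study itself reports: it assumes a single particle size and assumes each layer independently removes the same fraction of whatever reaches it. The layer efficiencies used are hypothetical. Real fabrics will deviate from this, and extra layers also make a mask harder to breathe through, which can push more air around the edges.

```python
from math import prod


def stacked_efficiency(layer_efficiencies: list[float]) -> float:
    """Combined removal efficiency of layers in series.

    Assumption: each layer acts independently, so the fractions that
    penetrate each layer multiply together.
    """
    return 1.0 - prod(1.0 - e for e in layer_efficiencies)


# Hypothetical single-layer efficiencies for one particle size.
print(f"one layer at 50%:   {stacked_efficiency([0.5]):.0%}")
print(f"two layers at 50%:  {stacked_efficiency([0.5, 0.5]):.0%}")
print(f"layers at 50%, 30%: {stacked_efficiency([0.5, 0.3]):.0%}")
```

The returns diminish with each added layer, which fits the observation that two layers were only slightly better than one for a fabric that was already fairly efficient.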

[November 2020 update: while I have left the above table from Konda et al. 2020 in this piece because it demonstrates an early test of both fine and ultrafine particle filtration efficiency of mask materials in 2020, several researchers have also pointed out some serious methodological flaws that limit its accuracy and utility, but the broader point remains: different materials have varying filtration efficiencies for different types and sizes of particles.]

Another early study posted in April 2020 used a respirator fit testing device to measure the particle removal efficiency of several homemade masks as well as a type of 3M surgical mask for particles ~20 nm to ~1 µm in size (without size-resolution in this range). The tested masks included several 2-ply cotton masks, some with additional filters, and each was also tested with an additional nylon layer. All of the homemade masks, which were worn on people, had lower particle removal efficiency than the surgical mask (which was ~75%), although some were within ~4% of the surgical mask while others were up to ~60% lower. Adding a layer of nylon stocking over the masks minimized the flow of air around the edges of the masks and improved particle filtration efficiency for all masks, which brought the particle filtration efficiency for five of the ten fabric masks above the 3M surgical mask baseline.

Another study published in May 2020 tested the filtration efficiency of common fabric combinations and masks using a “fluorescent virus-like nanoparticle” challenge. This was an unconventional test setup resulting in their N95 mask reference achieving less than 50% removal efficiency, which is inconsistent with conventional aerosol testing. But if we can use this as a reference, several materials (and material configurations) were found to have removal efficiency similar to the N95 mask, with a few performing even better than the N95. Multiple layers of cotton materials acting alone, as well as in combination with other fabrics such as flannel or terry cloth or non-woven polypropylene, all had performance similar to, or better than, the N95 references in this test setup.

Others have characterized material filtration efficiency using more conventional aerosol equipment, and some have published their data online in a spreadsheet. This includes materials that can be used as filter inserts into face masks or respirators (including custom 3D printed respirators), such as household air filters, coffee filters, and various fabrics. In this work, researchers reported a conservative measure of filtration efficiency in the “Goldilocks” range I mentioned earlier (0.3 µm) for something like 200 materials under a variety of conditions. Coffee filters weren’t that great, but vacuum cleaner HEPA filters were excellent. Again, increased thread count on fabrics helped increase efficiency, with 600 and 1000 thread count fabrics achieving over 50% efficiency in this size range. I think one can safely extrapolate that the efficiency for 0.5 or 1 µm particles is even higher than that, even though it wasn’t explicitly tested.

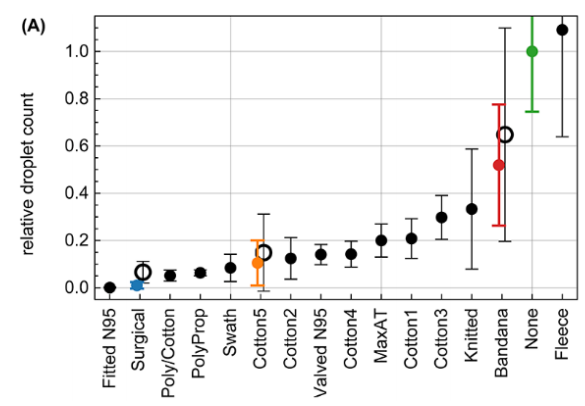

You’ve probably read about another recent study because it showed how ineffective neck gaiters are. Unfortunately, this study got a lot of press, much of which overstated its findings and overlooked the researchers’ own caveat that their testing was in no way representative of the variety of neck gaiters available. The researchers reported results for exactly one (1) neck gaiter, which they report was made of ‘fleece’ (but who wears a fleece neck gaiter in the middle of the summer?). The novelty of their work really was less about the testing of 14 mask products (which is not a large sample size, as the researchers clearly note), and more about how they used cell phone imaging to characterize mask efficiency in capturing respiratory emissions from people wearing masks and placing their heads into a custom designed box.

In fact, they use a respiratory emission tracking technique using smart phone imaging that, while quite intriguing and potentially quite useful, isn’t actually compared to conventional aerosol filtration techniques, so it’s really hard to say how relevant the efficiency measurements are. There is reference in the paper to a minimum detection limit of 0.5 µm, but they also report results in “droplet” nomenclature. They also show results for droplet distributions beginning around 0.1 mm in diameter (100 µm) and extending to over 1 mm (over 1000 µm), and they mention that the pixel analysis they use can’t measure the size of droplets less than 120 µm.

So… what does this “neck gaiter study” tell us? I think it tells us about ballistic large droplet efficiency and probably not much else. If that’s useful to us, then we might want to avoid a fleece neck gaiter, a bandana, or a knitted mask because of their low removal efficiency, and instead select a three-layer cotton-polypropylene-cotton mask, a two-layer polypropylene mask, or a “two-layer cotton, pleated style mask” (“Cotton5” with >90% efficiency), but perhaps not a “two-layer cotton, pleated style mask” (“Cotton3” with only ~75% efficiency). Wait, what?

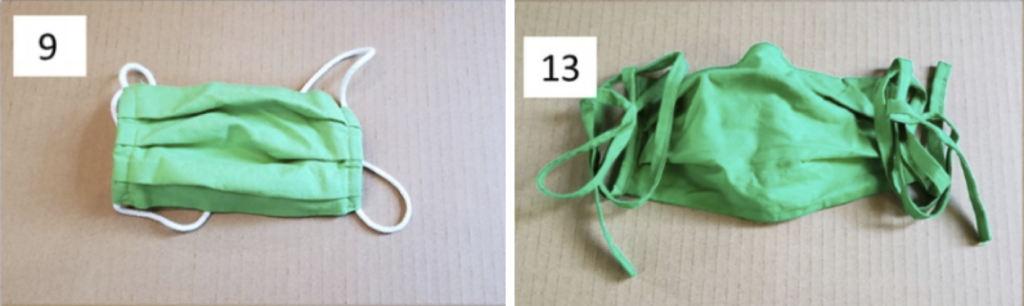

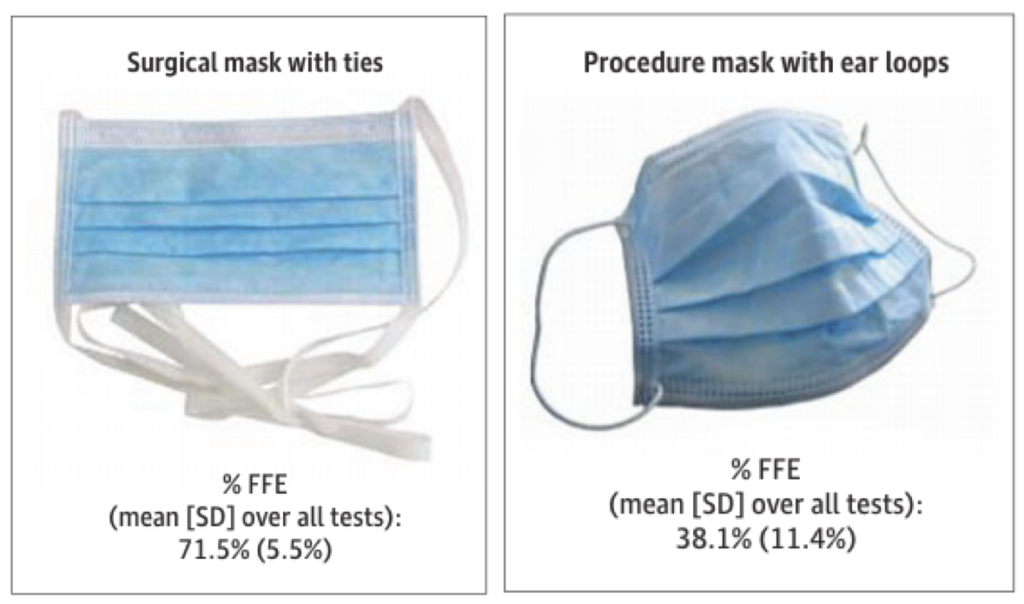

Aren’t those last two masks the same exact type? They are, nominally. In fact, they look very similar:

For whatever reason, the “droplet” removal efficiencies of these two otherwise similar masks are pretty different (although they’re at least both over 50%). Perhaps it’s a fit issue (loops versus straps) or perhaps an unknown fabric issue. And this comparison also says nothing about their aerosol removal efficiency.

So while this study garnered a lot of attention, I worry that it doesn’t provide much new information on what I’m more concerned about — aerosol filtration efficiency. I’m aware of at least one other really well-done droplet efficiency testing study, but again I’m not convinced that that’s what we need to be focusing on.

[November 2020 update: a recent pre-print from researchers at NIOSH provides better evidence on the neck gaiter situation, and as expected, they’re much better than the above study showed.]

Another recent study tested medical-style surgical masks and respirators similar to N95 masks worn on human subjects, each generally meant for healthcare settings, using respirator fit testing equipment, and interestingly found that surgical masks with ear loops removed less than 40% of particles smaller than 1 µm while surgical masks with ties removed over 70% of the same size particles (the N95 respirator removed >98% of particles in the tested size range, as expected). I’ve compiled a graphic of their results here:

Two surgical masks with very different particle removal efficiencies (Sickbert-Bennett et al., 2020)

This large discrepancy in surgical mask performance does not give me confidence; in fact, I think it highlights how important mask fit can really be. Otherwise, how can someone see these two products on the market, which ostensibly look the same, and understand that one might give you greater than 70% protection against respiratory aerosols while the other might give you less than 40% protection?

[November 2020 update: mask data continue to roll in, with better and better quality as well. I am providing a few additional links below. Many thanks to those researchers who continue to collect and/or communicate this information.]

Additional mask/material effectiveness studies since the original posting of this article include:

Preprint on masks and neck gaiters for source control on manikin by experienced group @NIOSH https://t.co/9ZNbyV3YKG N95 blocked 99% of aerosols, surgical mask 59%, 3-ply cloth mask 51%, neck gaiter 47% (1 layer) and 60% (2 layer), face shield 2% pic.twitter.com/o3y33SNWfe

— Linsey Marr (@linseymarr) October 7, 2020

Mask performance test results from my group. Interactive plots. Singer’s masks, gaiters, DIY, too.

Comparison of Cloth Mask Performance https://t.co/k0r7dYbCiM

— John Volckens (@Smogdr) September 3, 2020

Good study by aerosol scientists on material filtration efficiencies. Here are the results for 0.5-10 micron aerosols. Vacuum bags and surgical masks perform best, some fabrics are decent, others poor. https://t.co/4ILlwHZHGI pic.twitter.com/stDAT6xcy7

— Linsey Marr (@linseymarr) October 9, 2020

Mask fit effects

No matter how good a mask or mask material is at filtering relevant particles under test conditions, if the mask doesn’t fit well and seal around the user’s face, the effectiveness — especially in filtering aerosols during inhalation — can be greatly diminished.

Fortunately, mask fit has also been widely studied (mostly pre-pandemic). And, as we’ve already discussed, some of the aforementioned studies incorporate mask fit effects as well.

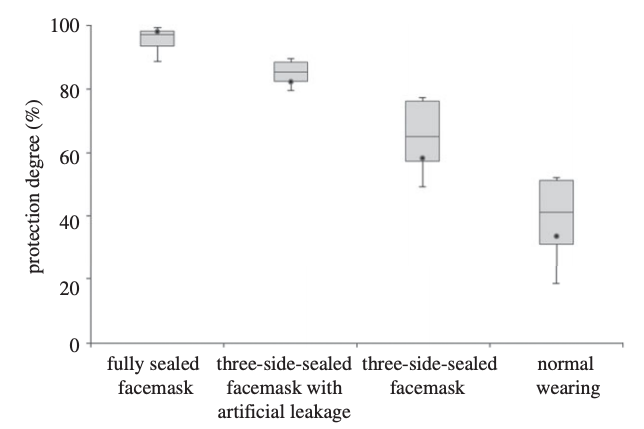

What we generally see for smaller aerosol removal efficiency by masks is something like this:

The removal efficiency of a facemask on a mannikin head increases with increasing edge-sealing (Lai et al., 2012)

Normal wearing of this particular mask (which looks to be a surgical-type mask) led to a particle removal efficiency (of small, ultrafine particles in this case) of less than 50%. Increasing amounts of sealing with adhesive tape increased removal efficiency to nearly 100%. This is an excellent example of how important mask leakage is, especially for small particles.

Those who have studied mask leakage over the years have shown that leakage can lead to 5 to 20 times more particles penetrating through otherwise decent masks; that there is wide variability in leakage among commercially available masks of all types; that surgical masks don’t work very well for aerosols unless they are sealed to the face with Vaseline (no thank you!); that nylon hosiery materials wrapped around the head can help achieve a good seal for all sorts of mask materials; and that no one seems to know how to fit a surgical mask correctly to their head, which creates enormous leaks. Oh, and that not all surgical masks are equal, as those used in hospital settings tend to have higher efficiency than those used in dental settings.

A few studies have also taken the next, rare, leap to measure viral content in the respiratory emissions of sick human subjects wearing masks and not wearing masks. While small in number, these studies have generally shown that wearing surgical masks can reduce the amount of influenza virus expelled by infected subjects by a large amount in larger particles (> 5 µm; 25-fold on average, but with variability) and by a smaller amount in smaller particles (< 5 µm; ~3-fold on average, again with variability).
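Fold reductions can be hard to compare with the percent efficiencies quoted elsewhere in this post, so here is a small conversion sketch. The function name is hypothetical, and the conversion simply assumes that an N-fold reduction means 1/N of the original amount remains.

```python
def fold_to_percent_reduction(fold: float) -> float:
    """Convert an N-fold reduction into a percent reduction.

    Assumes an N-fold reduction leaves 1/N of the original amount,
    so the fraction removed is 1 - 1/N.
    """
    if fold < 1.0:
        raise ValueError("fold must be at least 1 (1 means no reduction)")
    return (1.0 - 1.0 / fold) * 100.0


# Average fold reductions quoted above for influenza virus with surgical masks.
for label, fold in (("larger particles (> 5 µm)", 25.0),
                    ("smaller particles (< 5 µm)", 3.0)):
    print(f"{label}: {fold:g}-fold is about {fold_to_percent_reduction(fold):.0f}% reduction")
```

On average, then, a 25-fold reduction corresponds to roughly a 96% reduction and a 3-fold reduction to roughly a 67% reduction, keeping in mind the variability noted in those studies.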

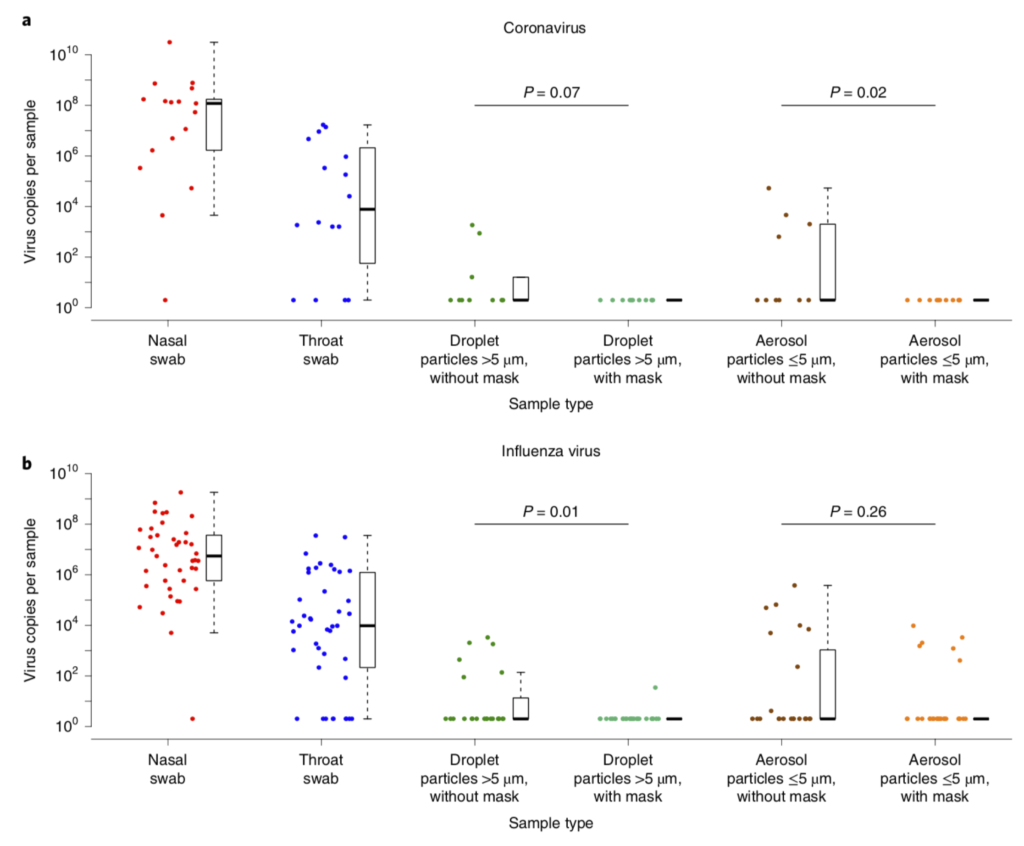

Another recent study showed similar results for coronavirus (not SARS-CoV-2) and influenza virus. Surgical masks reduced the viral content of both pathogen types in large particle (> 5 µm) emissions from infected subjects, but with some variability. However, surgical masks reduced viral content emitted from patients with seasonal (coronavirus) colds down to nothing, whereas their use led to a non-significant decrease in influenza content emitted from patients with influenza:

Efficacy of surgical masks in reducing respiratory virus shedding in droplets (>5 µm) and aerosols (<5 µm) (Leung et al., 2020)

To me, this combination of information means that surgical masks are probably quite useful, but their high potential for leakage presents some potential problems if we’re worried about smaller aerosols (which, again, I think we should be).

Epidemiology of masks

Finally, I am aware of several studies that have explored the utility of masks from an epidemiological perspective; that is, they evaluate infection rates among real people, ideally grouped into those who wore masks (or certain types of masks) and those who did not, or at least grouped into some similar classification.

When I first read the only study of which I am aware that compared the effects of cloth masks versus surgical masks on respiratory illnesses in healthcare workers using a randomized trial, my heart sank. They tracked over 1600 healthcare workers in hospitals in Vietnam and grouped them into those who wore (1) medical masks (like surgical masks), (2) cloth masks, and (3) a control group (usual practice, which included mask wearing — they did this for ethical reasons; they couldn’t tell one group to not wear masks at all). They used their assigned masks for 4 straight weeks. The group that wore cloth masks experienced a 13-fold higher risk of influenza-like illnesses compared to the medical mask group — not 13%, but 13 times higher risk!

But if you read a little further, it makes plenty of sense: the surgical/medical masks had a particle filtration efficiency of ~56% while the cloth masks had a particle filtration efficiency of only ~3%!

Although it is unclear which particle sizes were tested in this evaluation, I assume it was a standard respirator fit test for small particles (i.e., < 1 µm) because they reported N95 masks filtered >99% of particles in the same test. Regardless, it makes plenty of sense to me that a randomized controlled trial in healthcare workers demonstrated that wearing cloth masks with essentially 0% removal efficiency for what are probably smaller aerosols was associated with a much higher risk of acquiring influenza-like illnesses compared to a surgical mask group with ~50% removal efficiency. In fact, in a backwards way it adds some confidence to the importance of aerosol transmission of those influenza-like illnesses.

Beyond this study, other epidemiological evidence gives support to the effectiveness of mask wearing. For example, do you remember that story of a hair stylist in Missouri testing positive while seeing well over 100 clients? Well, it turns out that two hair stylists worked at the salon, both of whom were symptomatic with confirmed COVID-19, but both wore masks throughout their work days, as did the 139 clients they saw. Apparently no symptomatic secondary cases were reported among 104 clients that were later interviewed, and follow-up testing of 67 of those clients revealed no positive tests. One of the stylists reported wearing a two-layer cotton face covering while the other wore either a two-layer cotton face covering or a surgical mask. About half the clients reported wearing cloth face coverings and about half reported wearing surgical masks.

Others have compiled and synthesized the epidemiological evidence on mask-wearing and have reported, for example, that physical distancing combined with masking has reduced other respiratory illnesses across the world (with stronger effects for N95 than surgical masks). However, others have reported that medical (surgical) masks and cloth masks have not been effective in stemming the transmission of respiratory viruses in healthcare workers compared to respirators (like N95). Granted, healthcare settings may not be widely applicable to non-healthcare settings because of the differences in exposures and contact times involved, so it is difficult to generalize much from the epidemiology data on respiratory viruses broadly, and doubly difficult with SARS-CoV-2.

So where does this leave us on masks for the general public?

Simple recommendations for masks for the general public

With some synthesis of the information above, I feel reasonably comfortable providing the following advice:

- We should all be wearing masks of some kind in public, including in any indoor spaces and in crowded outdoor environments, especially in communities with significant prevalence of COVID-19

- We should strive for masks that effectively filter both larger droplets and smaller aerosols

- Your mask should be at least two layers, and the more layers the better (although too many layers could increase airflow resistance and lead to leaks around the material)

- Your mask should fit tightly against your face, but should be reasonably comfortable to breathe through

- Mask material is important. Consider using:

  - High thread count cotton (600 or higher) and/or multiple (~3) layers of cotton

  - Combinations of different fabrics in alternating layers (e.g., high thread count cotton + chiffon, silk, nylon, or flannel)

  - Nylon (or similar) wrappers placed over your mask to help it adhere close to your face

- Masks that incorporate separate filters can be effective, as long as:

  - The secondary filter spans most of the width of the mask and also your mouth such that airflow and aerosols pass through the filter rather than around it

  - The secondary filter is known to have a high removal efficiency, either from the database listed above or from more formal testing (such as ASTM standards or HEPA certification for face mask materials)

- The more information a mask manufacturer or seller provides, the more you can evaluate its claims against the recommendations and evidence above

- Conversely, the less information a mask manufacturer or seller provides, the less inclined I am to trust its performance

- Face shields can be used in addition to a good mask to provide additional eye protection, but they do not protect against aerosol inhalation and should not be used alone

- Masks with valves should not be used because they can expel infectious aerosols if worn by an infected person, providing a false sense of security to others

In the end, it is also worth noting that some of these considerations might be important only on the margins, but I think those margins may become important as we begin spending more time indoors with others, reopening schools and offices and heading into the fall and winter.

For example, if you and I are both wearing a mask that is 50% effective, then we each experience a 75% reduction in transmission risk: 50% of your emissions are captured by your mask, and then my mask captures 50% of what gets through (another 25% of the original amount), providing a 75% net reduction for both you and me.

A 50% effective mask may very well have been plenty effective for limiting transmission in, say, grocery stores and outdoor farmers markets over the spring and summer, but when we return to spending 2, 4, or 8 hours a day with others in indoor spaces, some of whom may be infected, then I’ll take any additional improvement in effectiveness that I can reasonably achieve.
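To make the two-mask arithmetic above concrete, here is a minimal sketch. It rests on simplifying assumptions: each mask removes the same fraction whether you are exhaling or inhaling, and the two masks act independently, so the fractions that pass through simply multiply. The function name `combined_reduction` is hypothetical, and the efficiencies other than 50% are illustrative values, not measurements.

```python
def combined_reduction(source_efficiency: float, wearer_efficiency: float) -> float:
    """Net reduction when both the infected person and the recipient wear masks.

    Assumption (simplified): the masks act independently, so the fraction
    that passes through both is the product of the two pass-through fractions.
    Efficiencies are fractions between 0 and 1.
    """
    for value in (source_efficiency, wearer_efficiency):
        if not 0.0 <= value <= 1.0:
            raise ValueError("efficiencies must be between 0 and 1")
    passes_both = (1.0 - source_efficiency) * (1.0 - wearer_efficiency)
    return 1.0 - passes_both


# The 50% / 50% case from the text, plus a few hypothetical alternatives.
for eff in (0.3, 0.5, 0.7, 0.9):
    net = combined_reduction(eff, eff)
    print(f"two masks at {eff:.0%} each: net reduction {net:.0%}")
```

With both masks at 50%, this reproduces the 75% net reduction described above. Under the same assumptions, modest gains in each mask's effectiveness compound, which is why those margins may matter more as time spent indoors with others goes up.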

Footnotes:

*I consider the distinction between “mask” and “face covering” not particularly useful, but if you want to draw a distinction, I would say that “masks” usually refer to hospital or industrial grade PPE such as respirators and surgical masks, while “face coverings” usually refer to garments of a more improvised nature, typically made of cloth or other fabrics. While “face coverings” can be shaped into “masks,” they’re explicitly not considered the same as hospital or industrial grade PPE like N95 masks. However, the distinction between high quality “face coverings” that are shaped and worn like “masks” and conventional “masks” such as surgical masks can be small in terms of performance.

**Linsey Marr at Virginia Tech has a really nice slide deck on viruses in air for those interested in learning more. I also have a slide deck on this topic, as well as modes of transmission, from my 2020 summer course ENVE 576 Indoor Air Pollution.